GPT-3: A Chatbot Unleashed

January 21, 2023

I’ll preface this by saying I don’t like AI tools all that much. But after looking at OpenAI’s GPT-3 language model, I can certainly say I’m interested in them. Most of my problems with them come from ethical issues, like how AI art algorithms are trained on digital art without the consent of artists.

But I promised myself that today would be mostly about GPT-3. Available freely through OpenAI’s ChatGPT program, GPT-3 is a so-called “large language model” that builds coherent responses by predicting which word is likely to come next. Essentially, it’s a much more advanced version of hitting the suggested words on your keyboard over and over again. It’s a flashy application of AI, and it could probably pass the Turing test on social media. So yeah, I’ll admit that it’s pretty cool.
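To make the keyboard comparison concrete, here’s a toy Python sketch of “hitting the suggested word over and over.” To be clear, this is *not* how GPT-3 works internally: GPT-3 uses a huge neural network (a transformer) over tokens, not a simple word-count table. The function names `build_suggestions` and `tap_suggestions` are made up for illustration; the sketch just counts which word tends to follow which (a bigram table) and keeps tapping the top suggestion.

```python
from collections import Counter, defaultdict


def build_suggestions(text: str) -> dict[str, Counter]:
    """Count which word follows which (a bigram table), like a keyboard learning your habits.

    Hypothetical helper for illustration only; real language models are far more complex.
    """
    words = text.lower().split()
    table: dict[str, Counter] = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        table[current][following] += 1
    return table


def tap_suggestions(table: dict[str, Counter], start: str, taps: int) -> str:
    """Start from `start` and repeatedly 'tap' the most common next word."""
    out = [start]
    for _ in range(taps):
        options = table.get(out[-1])
        if not options:
            break  # no known follower: the keyboard has nothing left to suggest
        out.append(options.most_common(1)[0][0])
    return " ".join(out)


if __name__ == "__main__":
    table = build_suggestions("the cat sat on the mat and the cat ran to the door")
    print(tap_suggestions(table, "the", 5))  # → the cat sat on the cat
```

The output is grammatical-ish but meaningless, because the sketch only knows what words tend to sit next to each other, not what any of them mean.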

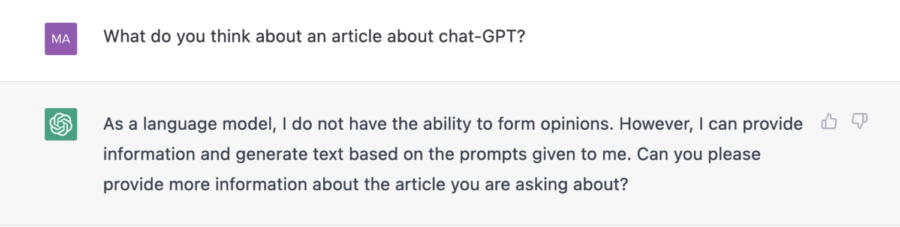

My main issue with ChatGPT is the very reason it works so well: the GPT-3 language model is basically an advanced BS generator. It is trained to figure out which words sound good together, regardless of their accuracy or merit. And when you have something like that, trained on the cesspool of our collective internet posts, it can spew out some pretty horrible things: conspiracy theories, racist stereotypes, you name it. OpenAI recognizes this flaw and doesn’t allow ChatGPT to generate responses related to certain topics. I admire that they’ve drawn lines in the sand here, and it makes me happy that some tech companies are thinking about ethical practice.

But back to the AI. The greatest strength of ChatGPT is its adaptability: it can alter the style or length of its responses to fit the prompts it is given. Tell it to write in the style of Shakespeare, and it’ll spit out something you could drop in the middle of *Romeo and Juliet*. As for the quality of the writing, well, after reading some of the sample responses and hearing about how it has been used to generate scientific perspective articles, I’d say you’re better off writing your own essays.

All this has me curious about what future AI might have in writing or education. With enough training and guidance, would it be possible to build a program that could write lessons for a class or deliver pertinent feedback on writing? With all the possibilities that AI presents, I’m starting to wonder whether or not it should fill a role, not whether or not it can.

![Image credit to [puamelia]](https://memorialswordandshield.com/wp-content/uploads/2025/08/3435027358_ef87531f0b_o-1200x803.jpg)